Azure’s PromptFlow: Deploying LLM Applications in Production

In the ever-changing world of Artificial Intelligence (AI), new tools and frameworks are regularly introduced to tap into the capabilities of Large Language Models (LLMs). Among them, Azure's PromptFlow stands out as a solution designed to simplify prompt engineering and help manage AI projects efficiently. Whether you're a machine learning enthusiast, a data scientist, or an AI application developer, adding PromptFlow to your toolkit can significantly raise the quality of your projects. This guide explores PromptFlow's core features, its role in LLM application development, and the opportunities it opens up.

Understanding Azure's PromptFlow within the LLM Ecosystem

Azure's PromptFlow, a dedicated development environment within the Azure Machine Learning (Azure ML) suite of services, is designed to streamline the journey from prototype to deployment of LLM applications. It supports experimentation with prompt and model variants, integrates with existing service infrastructure, and deploys LLM apps as managed endpoints. These capabilities address common challenges of developing with LLMs, such as handling multi-modal deployments and balancing quality, cost, and inference speed across different use cases.

Creating, Testing, and Evaluating Prompts

Prompt engineering is pivotal in LLM applications. PromptFlow distinguishes itself by offering an easy-to-use interface for designing, testing, and evaluating prompts in real time. This is especially important in customer service automation, where the LLM has to interpret customer queries and either route them to the appropriate department or provide an immediate solution. Imagine a customer asking, 'How do I reset my password?' In PromptFlow, developers can iteratively test and refine the prompt that translates this query into a specific action, such as sending a password reset link or directing the customer to the tech support team. This iterative refinement provides the fast, efficient feedback loop that LLM application development depends on.
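To make that iteration loop concrete, here is a minimal Python sketch that compares two prompt variants on the password-reset query. It does not use the PromptFlow SDK: `stub_llm` is a hypothetical keyword-based stand-in for a real model call, and the labels (`PASSWORD_RESET`, `BILLING`, `TECH_SUPPORT`) and action names are illustrative assumptions. The point is only that a prompt which constrains the output format produces something a router can act on.

```python
from string import Template

# Two hypothetical prompt variants for routing customer-service queries.
PROMPT_VARIANTS = {
    "v1_terse": Template("Classify the query: $query"),
    "v2_labeled": Template(
        "You route customer-service queries.\n"
        "Answer with exactly one label: PASSWORD_RESET, BILLING, TECH_SUPPORT.\n"
        "Query: $query\n"
        "Label:"
    ),
}

# Hypothetical mapping from a model label to a concrete action.
ACTIONS = {
    "PASSWORD_RESET": "send_password_reset_link",
    "BILLING": "route_to_billing",
    "TECH_SUPPORT": "route_to_tech_support",
}


def stub_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call.

    It only returns a clean label when the prompt constrains the output
    format, mimicking how a vague prompt can yield free text that the
    router cannot act on.
    """
    if "exactly one label" not in prompt:
        return "This looks like an account question."
    query = prompt.split("Query:", 1)[1].lower()
    if "password" in query:
        return "PASSWORD_RESET"
    if "invoice" in query or "charge" in query:
        return "BILLING"
    return "TECH_SUPPORT"


def route(label: str) -> str:
    """Map a label to an action, escalating anything unrecognised."""
    return ACTIONS.get(label.strip(), "escalate_to_human")


query = "How do I reset my password?"
for name, template in PROMPT_VARIANTS.items():
    action = route(stub_llm(template.substitute(query=query)))
    print(f"{name}: {action}")
```

Run against the same query, the terse variant falls through to `escalate_to_human`, while the labeled variant maps cleanly to `send_password_reset_link`.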

PromptFlow not only allows mass testing across multiple inputs but also uses metrics such as Classification Accuracy, Relevance, and Groundedness to assess how effective prompts are. Groundedness, in particular, measures how well the AI's generated responses align with the data from the input source. It's a crucial metric, especially if you're concerned that your application might produce information that can't be verified against your source data.
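Mass testing boils down to running every input through a prompt variant and scoring the outputs. The sketch below shows the Classification Accuracy part in plain Python. It is not PromptFlow's batch-run machinery: the dataset, `keyword_variant`, and `naive_variant` are hypothetical stand-ins for real prompt variants backed by a model.

```python
from typing import Callable, Dict, List, Sequence


def classification_accuracy(predicted: Sequence[str], expected: Sequence[str]) -> float:
    """Fraction of predictions that exactly match the expected label."""
    if len(predicted) != len(expected):
        raise ValueError("predicted and expected must have the same length")
    if not expected:
        raise ValueError("need at least one labelled example")
    correct = sum(p == e for p, e in zip(predicted, expected))
    return correct / len(expected)


def run_batch(classify: Callable[[str], str], dataset: List[Dict[str, str]]) -> float:
    """Run one variant over every row and score it against the labels."""
    predicted = [classify(row["query"]) for row in dataset]
    expected = [row["label"] for row in dataset]
    return classification_accuracy(predicted, expected)


# Hypothetical labelled test set.
DATASET = [
    {"query": "How do I reset my password?", "label": "PASSWORD_RESET"},
    {"query": "Why was my card charged twice?", "label": "BILLING"},
    {"query": "The app crashes on startup", "label": "TECH_SUPPORT"},
    {"query": "I forgot my login password", "label": "PASSWORD_RESET"},
]


def keyword_variant(query: str) -> str:
    """Hypothetical variant standing in for a well-specified prompt."""
    q = query.lower()
    if "password" in q:
        return "PASSWORD_RESET"
    if "charge" in q or "invoice" in q:
        return "BILLING"
    return "TECH_SUPPORT"


def naive_variant(query: str) -> str:
    """Hypothetical variant standing in for an under-specified prompt."""
    return "TECH_SUPPORT"


for variant in (keyword_variant, naive_variant):
    print(variant.__name__, run_batch(variant, DATASET))
```

Keeping the scoring function separate from the variants makes it easy to add metrics such as Relevance or Groundedness alongside accuracy in the same loop.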

In evaluating Groundedness, answers are cross-referenced with a user-defined source of truth, such as your input database. Even if an answer is factually correct, it's considered ungrounded if it can't be verified against this source. A high Groundedness score indicates that the information in the AI's responses can be validated by your input source or database. A low score suggests that the AI may be drawing on its pre-trained knowledge rather than the source, and that knowledge may not match the specific context or domain of your input. The Groundedness scale ranges from 1 (the response cannot be verified against the source) to 5 (the response is fully aligned with the source).
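To illustrate how a 1-to-5 Groundedness scale behaves, here is a deliberately simple sketch. This is not how PromptFlow computes the metric (its built-in evaluation relies on its own, model-based approach); it is a lexical-overlap stand-in, assuming a sentence counts as "supported" when at least 60% of its content words appear in the source. The stopword list and threshold are arbitrary assumptions.

```python
import re

# Arbitrary, hypothetical stopword list used to ignore filler words.
STOPWORDS = {"the", "a", "an", "is", "are", "of", "to", "and", "in", "on",
             "for", "your", "you", "it", "can", "be"}


def _content_words(text: str) -> set:
    """Lower-cased alphanumeric tokens, minus stopwords."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def groundedness_score(answer: str, source: str, support_threshold: float = 0.6) -> int:
    """Map the share of supported answer sentences onto a 1..5 scale."""
    source_words = _content_words(source)
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", answer.strip()) if s]
    if not sentences:
        return 1
    supported = 0
    for sentence in sentences:
        words = _content_words(sentence)
        if words and len(words & source_words) / len(words) >= support_threshold:
            supported += 1
    # 0% supported -> 1 (unverifiable), 100% supported -> 5 (fully aligned).
    return 1 + round(4 * supported / len(sentences))


SOURCE = ("Password resets are handled from the account settings page. "
          "A reset link expires after 24 hours.")

print(groundedness_score("A reset link expires after 24 hours.", SOURCE))
print(groundedness_score("Our offices open at 9am on Mondays.", SOURCE))
```

The second answer may well be true, but because nothing in `SOURCE` supports it, it lands at the bottom of the scale, which mirrors the "correct but ungrounded" case described above.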

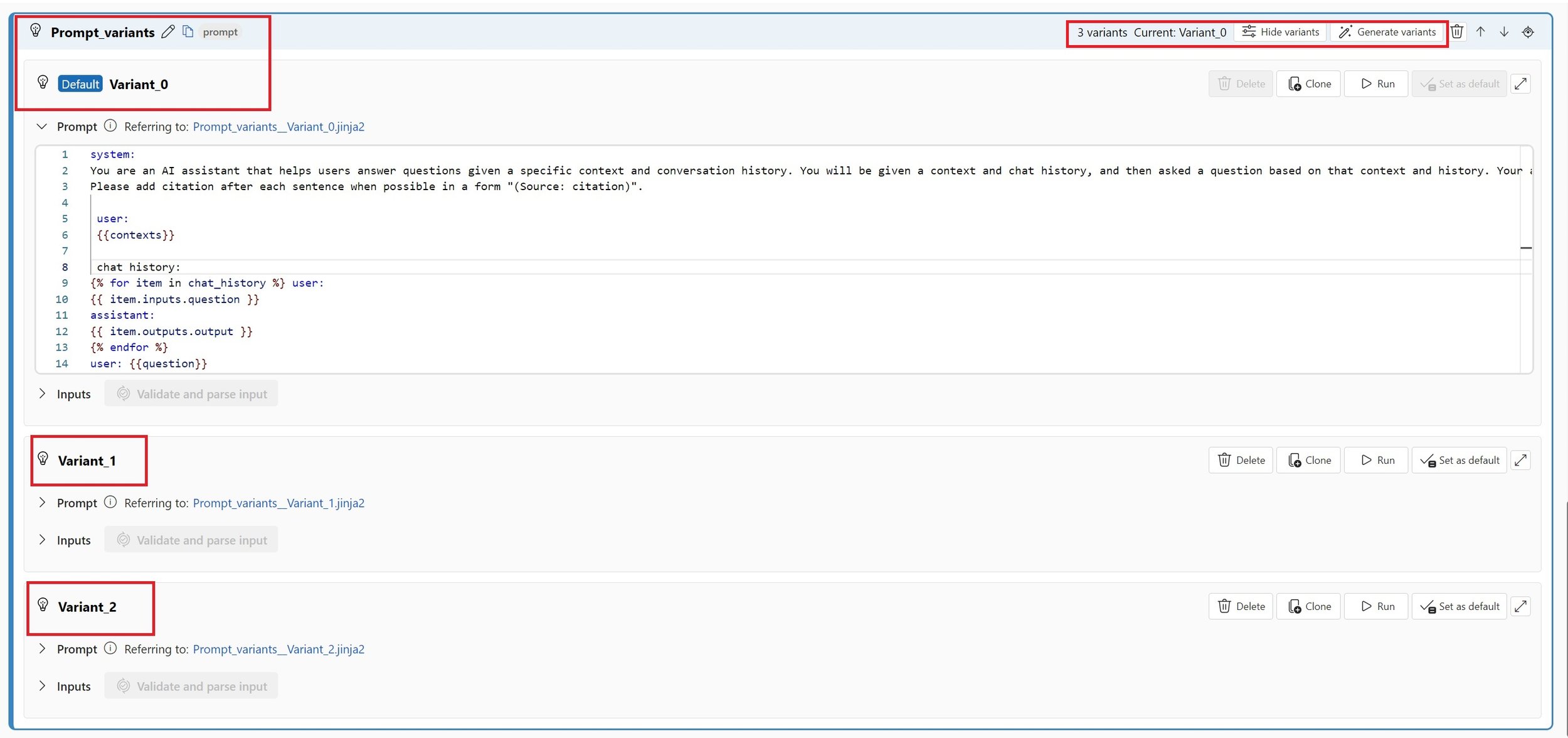

The ability to create multiple prompt variants is also invaluable when applying the technology to diverse use cases. Teams can start with simple prompts on smaller models and move to more complex prompts or larger models only where quality demands it; this phased approach helps optimize both cost and performance.
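One way to act on that phased approach is to pick the cheapest variant that clears a quality bar from your evaluation runs. The sketch below assumes you already have a quality score per variant (for example, accuracy from a batch test); the `Variant` class, variant names, costs, and scores are all hypothetical illustration values, not measurements.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Variant:
    name: str
    cost_per_1k_requests: float  # hypothetical cost units
    quality: float               # e.g. accuracy from a batch evaluation


def pick_variant(variants: List[Variant], min_quality: float) -> Optional[Variant]:
    """Return the cheapest variant meeting the quality bar, or None."""
    eligible = [v for v in variants if v.quality >= min_quality]
    if not eligible:
        return None
    return min(eligible, key=lambda v: v.cost_per_1k_requests)


# Hypothetical evaluation results, ordered from simple to complex.
VARIANTS = [
    Variant("simple_prompt_small_model", 0.5, 0.78),
    Variant("few_shot_small_model", 0.8, 0.88),
    Variant("few_shot_large_model", 6.0, 0.93),
]

choice = pick_variant(VARIANTS, min_quality=0.85)
print(choice.name if choice else "no variant meets the bar")
```

Raising `min_quality` is what pushes you up the ladder toward the larger, more expensive model; lowering it lets the cheaper variants win.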

Building Complex Pipelines and Task Composability

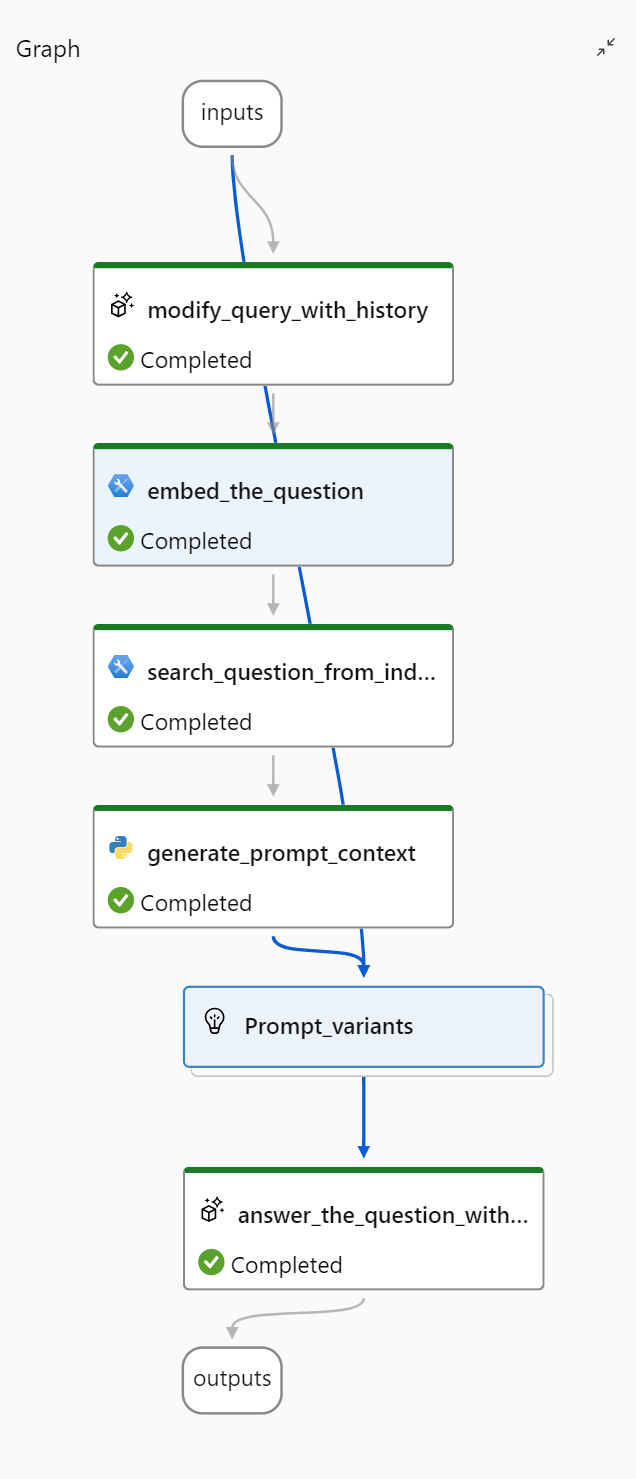

PromptFlow simplifies the orchestration and management of intricate pipelines, meeting the task-composability requirements of LLM applications. This is especially relevant for tasks such as using Retrieval-Augmented Generation (RAG) to enrich a text-based query with relevant information and then generating a natural language response from that enriched data.
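Task composability can be pictured as small steps chained into one pipeline, each taking and returning a shared state. The sketch below composes hypothetical `retrieve`, `augment`, and `generate` steps in plain Python; it is not PromptFlow's flow format, the `KNOWLEDGE` dictionary stands in for a real document index, and `generate` is a stub in place of an LLM call.

```python
from functools import reduce
from typing import Any, Callable, Dict

State = Dict[str, Any]
Step = Callable[[State], State]


def compose(*steps: Step) -> Step:
    """Chain steps left to right; each receives the previous step's output."""
    return lambda state: reduce(lambda acc, step: step(acc), steps, state)


# Hypothetical knowledge base standing in for a real index.
KNOWLEDGE = {
    "password": "Reset links are sent from the account settings page.",
    "refund": "Refunds are processed within 5 business days.",
}


def retrieve(state: State) -> State:
    query = str(state["query"]).lower()
    docs = [text for key, text in KNOWLEDGE.items() if key in query]
    return {**state, "docs": docs}


def augment(state: State) -> State:
    context = "\n".join(state["docs"]) or "No relevant documents found."
    prompt = f"Context:\n{context}\n\nQuestion: {state['query']}\nAnswer:"
    return {**state, "prompt": prompt}


def generate(state: State) -> State:
    # Stub: a real step would send state["prompt"] to an LLM.
    docs = state["docs"]
    return {**state, "answer": docs[0] if docs else "I don't know."}


rag_pipeline = compose(retrieve, augment, generate)
result = rag_pipeline({"query": "How do I reset my password?"})
print(result["answer"])
```

Because each step only reads and extends the state, you can swap the stub `generate` for a real model call, or insert a re-ranking step between `retrieve` and `augment`, without touching the other steps.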

It also facilitates setting up an end-to-end LLMOps (Large Language Model Operations) pipeline that can create an online endpoint in your Azure Machine Learning workspace, deploy your language model there, and then manage traffic routing to that endpoint. Because this pipeline governs how large language models are deployed and managed in real time, its configuration directly affects latency, cost, and resource allocation.
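Azure ML online endpoints can split incoming traffic across deployments by percentage, which is how a new deployment is typically rolled out gradually. The sketch below only simulates that idea locally with weighted random selection; it does not call Azure, and the deployment names `blue` and `green` and the 90/10 split are hypothetical.

```python
import random
from collections import Counter
from typing import Dict


def validate_traffic(traffic: Dict[str, int]) -> None:
    """Percentages must be non-negative and add up to 100."""
    if any(p < 0 for p in traffic.values()):
        raise ValueError("traffic percentages must be non-negative")
    if sum(traffic.values()) != 100:
        raise ValueError("traffic percentages must sum to 100")


def pick_deployment(traffic: Dict[str, int], rng: random.Random) -> str:
    """Choose a deployment for one request, weighted by its traffic share."""
    validate_traffic(traffic)
    names = list(traffic)
    return rng.choices(names, weights=[traffic[n] for n in names], k=1)[0]


rng = random.Random(0)  # seeded so the simulation is repeatable
traffic = {"blue": 90, "green": 10}
counts = Counter(pick_deployment(traffic, rng) for _ in range(10_000))
print(counts)
```

Shifting the weights step by step (90/10, then 50/50, then 0/100) is the usual way to move traffic onto a new deployment while watching its latency and cost.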

Harnessing Retrieval-Augmented Generation (RAG)

Azure's PromptFlow is well equipped to automate Retrieval-Augmented Generation, enabling deeper engagement with your data. Once you upload your data to, or point PromptFlow at, blob storage, it can initiate a RAG pipeline that chunks and embeds your documents and stores the embeddings in a vector store such as Azure Cognitive Search for later retrieval. This is instrumental for use cases like querying or "chatting" with your data: it lets you navigate large datasets, retrieve relevant information, and hold a useful dialogue with your data.
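The chunk, embed, store, retrieve sequence can be sketched in a few dozen lines of plain Python. This is not what PromptFlow or Azure Cognitive Search do internally: chunking here is by word count with overlap, the "embedding" is a hashed bag-of-words vector standing in for a real embedding model, and `InMemoryVectorStore` is a hypothetical stand-in for a vector database. Chunk size, overlap, and vector dimension are arbitrary assumptions.

```python
import hashlib
import math
import re
from typing import List, Tuple


def chunk_text(text: str, chunk_size: int = 40, overlap: int = 10) -> List[str]:
    """Split text into overlapping windows of roughly chunk_size words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break
    return chunks


def embed(text: str, dim: int = 1024) -> List[float]:
    """Toy embedding: hashed token counts, L2-normalised (not a real model)."""
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        idx = int(hashlib.sha256(token.encode()).hexdigest(), 16) % dim
        vec[idx] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector store such as a search index."""

    def __init__(self) -> None:
        self._items: List[Tuple[str, List[float]]] = []

    def add(self, text: str) -> None:
        self._items.append((text, embed(text)))

    def search(self, query: str, k: int = 1) -> List[str]:
        q = embed(query)
        # Vectors are normalised, so the dot product is cosine similarity.
        scored = [(sum(a * b for a, b in zip(q, vec)), text) for text, vec in self._items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for _, text in scored[:k]]


store = InMemoryVectorStore()
for doc in ["Password resets are done from the account settings page.",
            "Invoices are emailed on the first day of each month."]:
    for chunk in chunk_text(doc):
        store.add(chunk)

print(store.search("How do I reset my password from account settings?"))
```

Overlapping chunks reduce the chance that a relevant sentence is split across a boundary and lost; a production pipeline would swap `embed` for a real embedding model and the in-memory list for a managed index.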

This RAG architecture is centred on PromptFlow as the main orchestrator. Users start by entering queries into the Streamlit app front-end web UI, which sends them to the PromptFlow workflow's online endpoint. Each query is enriched with the conversation history, embedded, and matched against the indexed documents to retrieve relevant context. The PromptFlow orchestrator then passes the query and that context to the GPT-4 model to generate a response.
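The query-time half of that flow can be sketched as three small functions: fold recent chat history into the search query, retrieve matching documents, and build the grounded prompt for the model. Assumptions to note: history is simply concatenated (real systems often ask the LLM to rewrite the follow-up question instead), retrieval uses keyword overlap in place of embedding search, the documents are hypothetical, and the final GPT-4 call is omitted.

```python
import re
from typing import Dict, List


def tokens(text: str) -> set:
    """Lower-cased alphanumeric tokens of a string."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def contextualize(question: str, history: List[Dict[str, str]], max_turns: int = 2) -> str:
    """Prepend the most recent chat turns so follow-up questions keep their context."""
    recent = [turn["content"] for turn in history[-max_turns:]]
    return " ".join(recent + [question])


def retrieve(query: str, documents: List[str], k: int = 1) -> List[str]:
    """Rank documents by keyword overlap (a stand-in for vector search)."""
    q = tokens(query)
    ranked = sorted(documents, key=lambda d: len(q & tokens(d)), reverse=True)
    return [d for d in ranked[:k] if q & tokens(d)]


def build_prompt(question: str, context_docs: List[str]) -> str:
    """Assemble the grounded prompt that would be sent to the chat model."""
    context = "\n".join(f"- {d}" for d in context_docs) or "- (no context found)"
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


DOCUMENTS = [
    "The billing portal password is reset from the account settings page.",
    "Refunds are processed within five business days.",
]
history = [{"role": "user", "content": "I can't log in to the billing portal."}]
question = "How do I reset the password?"

search_query = contextualize(question, history)
prompt = build_prompt(question, retrieve(search_query, DOCUMENTS))
print(prompt)
```

Without the history, "the password" is ambiguous; folding in the previous turn is what lets retrieval find the billing-portal document.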

The workflow relies on Virtual Machines for data processing, with data stored securely in designated storage containers and keys safeguarded in Azure Key Vault. Deployments use Docker containerization: images are stored in Azure Container Registry and then deployed through Azure DevOps Pipelines to Azure App Service, giving users a consistent and reliable experience.

Deployment, Customization, and Service Infrastructure Integration

PromptFlow makes deployment smooth while still allowing extensive customization. It creates an endpoint in Azure ML, providing a URL through which your model can be accessed. It also integrates with service infrastructure, which meets the need for tools and plugins in LLM applications: these extend a model's capabilities and address inherent shortcomings of pre-trained LLMs, such as knowledge cut-off dates or weak arithmetic skills.
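As an example of a tool that covers the arithmetic gap, the sketch below is a small, safe calculator a flow could call instead of asking the model to do the maths. It is plain Python, not PromptFlow's tool API; `TOOLS` and `call_tool` are hypothetical names for a minimal tool registry. It parses the expression with `ast` and only allows numeric literals and basic operators, so arbitrary code cannot be executed.

```python
import ast
import operator
from typing import Callable, Dict, Union

Number = Union[int, float]

# Whitelisted operators; anything else is rejected.
_BIN_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
            ast.Mult: operator.mul, ast.Div: operator.truediv}
_UNARY_OPS = {ast.USub: operator.neg, ast.UAdd: operator.pos}


def safe_eval(expr: str) -> Number:
    """Evaluate a basic arithmetic expression without using eval()."""
    def _eval(node: ast.AST) -> Number:
        if isinstance(node, ast.Expression):
            return _eval(node.body)
        if (isinstance(node, ast.Constant) and isinstance(node.value, (int, float))
                and not isinstance(node.value, bool)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
            return _BIN_OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
            return _UNARY_OPS[type(node.op)](_eval(node.operand))
        raise ValueError(f"unsupported expression: {expr!r}")

    return _eval(ast.parse(expr, mode="eval"))


# Hypothetical tool registry: name -> callable taking a string argument.
TOOLS: Dict[str, Callable[[str], Number]] = {"calculator": safe_eval}


def call_tool(name: str, argument: str) -> Number:
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](argument)


print(call_tool("calculator", "1234 * 5678"))
```

Exponentiation is left out on purpose, since expressions like `9**9**9` can tie up the process; the whitelist is easy to extend if a use case needs more operators.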

Managed online endpoints in Azure run on CPU and GPU machines and offer a scalable, fully managed solution that can improve resource efficiency and potentially lower costs. They also remove the burden of setting up and maintaining the underlying deployment infrastructure.

Authentication is a key deployment decision: you can choose between key-based authentication and Azure Machine Learning token-based authentication. This choice has security implications and may also affect latency, depending on the authentication mechanism and how permissions are set up.
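Whichever mode you choose, a client typically presents the credential as a bearer token in the `Authorization` header when calling the endpoint's scoring URL. The sketch below builds such a request with the standard library but does not send it. The URL uses a placeholder `.invalid` host, the credential is a placeholder string, and the `question` field in the payload is a hypothetical flow input; check your own endpoint's scoring URI and input schema.

```python
import json
import urllib.request
from typing import Any, Dict


def build_scoring_request(scoring_uri: str, credential: str,
                          payload: Dict[str, Any]) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request with a bearer credential."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        scoring_uri,
        data=body,
        headers={
            "Content-Type": "application/json",
            # Endpoint key or AML token, depending on the chosen auth mode.
            "Authorization": f"Bearer {credential}",
        },
        method="POST",
    )


req = build_scoring_request(
    "https://my-endpoint.example.invalid/score",  # placeholder URL
    "<endpoint-key-or-token>",                    # placeholder credential
    {"question": "How do I reset my password?"},  # hypothetical flow input
)
print(req.get_method(), req.full_url)
# Sending it would be: urllib.request.urlopen(req)
```

Keeping the credential out of source code (for example, reading it from Azure Key Vault or an environment variable) matters more than which of the two modes you pick.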

Endpoint configuration is another crucial aspect. You can configure an endpoint's basic settings, decide whether to deploy a new endpoint or update an existing one, and set the authentication and identity types. These settings can affect endpoint latency and resource management, because they determine how the endpoint interacts with Azure resources and handles requests.
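These choices can be captured and checked as plain configuration before anything is deployed. The sketch below is a hypothetical local model of those settings, not the Azure ML SDK: `EndpointConfig`, `plan_deployment`, and the identity-type strings are illustrative names, while `key` and `aml_token` mirror the two authentication options described above.

```python
from dataclasses import dataclass
from typing import Set

ALLOWED_AUTH_MODES = {"key", "aml_token"}
ALLOWED_IDENTITY_TYPES = {"system_assigned", "user_assigned"}  # illustrative labels


@dataclass(frozen=True)
class EndpointConfig:
    """Hypothetical endpoint settings, validated on construction."""
    name: str
    auth_mode: str = "key"
    identity_type: str = "system_assigned"

    def __post_init__(self) -> None:
        if self.auth_mode not in ALLOWED_AUTH_MODES:
            raise ValueError(f"unsupported auth_mode: {self.auth_mode!r}")
        if self.identity_type not in ALLOWED_IDENTITY_TYPES:
            raise ValueError(f"unsupported identity_type: {self.identity_type!r}")


def plan_deployment(config: EndpointConfig, existing_endpoints: Set[str]) -> str:
    """Decide whether this config updates an existing endpoint or creates a new one."""
    return "update" if config.name in existing_endpoints else "create"


existing = {"support-bot"}
print(plan_deployment(EndpointConfig("support-bot"), existing))
print(plan_deployment(EndpointConfig("faq-bot", auth_mode="aml_token"), existing))
```

Validating settings up front turns a typo in the authentication mode into an immediate error rather than a failed deployment.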

In the complex ecosystem of LLM application development, human involvement remains critical. PromptFlow supports this by providing detailed analysis of outputs and evaluations at each development stage, meeting the need for solid evaluation mechanisms.

Additionally, the built-in chat interface lets developers test individual steps of the workflow or thoroughly vet the entire solution before it is deployed.

All things considered, Azure's PromptFlow is a versatile solution that fits into the LLM application development cycle and addresses many of the challenges of prompt crafting and project oversight. Its features cater to a wide spectrum of AI practitioners, offering a scaffold to build, evaluate, and deploy sophisticated LLM applications.

In the realm of LLM technology, we have barely scratched the surface. Yet, it's clear that Generative AI holds the promise of revolutionizing our work and learning environments. At Advancing Analytics, we are exhilarated to lead the charge in this dynamic sector. To learn more about implementing LLMs in your enterprise, download our LLM Workshop flyer or get in touch with us today.