Data Lakehouse vs Data Warehouse in Microsoft Fabric

If you have had a play around with Microsoft Fabric you will have seen that there are lots of different ways to get to your end goal. If you haven’t heard about Fabric, you can check out our intro blog post which is here!

Microsoft have angled Fabric to be persona driven but it is still important to look deeper and to highlight the technical reasons for picking a specific tool. A question that has come up a lot is whether to pick a Lakehouse or a Data Warehouse. The team developing your platform is still an important consideration but if you have a team/s that can work with both, then where do you stand?

Fabric is full of different features, and it can get complex very quickly. In this blog post I will go through how to decide whether to choose a Lakehouse or a Data Warehouse artifact. Both artifacts read Delta, so how do they differ?

The best place to start is to look at what each of the artifacts are and what they can do.

Lakehouse

At Advancing Analytics we are big fans of the Lakehouse architecture, and we are happy that Microsoft has considered this as a part of Fabric. The Lakehouse artifact can be used to store any data type, whether that is structured or unstructured and it is stored by default in the Delta format. The Lakehouse artifact is supported by Notebooks which lets us interact with our data in a number of different languages, for example Scala, PySpark, Spark SQL or R. Data can be stored as a folder, files, or databases and tables.

Data Warehouse

There are two types of Data Warehouse available within Microsoft Fabric; SQL Endpoint and Synapse Data Warehouse.

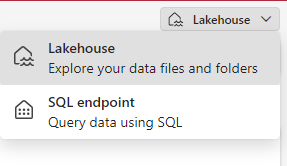

The SQL Endpoint warehouse is an auto-generated artifact which is created when you create a Lakehouse artifact. This can be accessed by clicking into your Lakehouse and navigating to the top right and selecting ‘SQL endpoint’ from the view in the corner.

The view switcher can be used to change between a Lakehouse view or a SQL endpoint view for your data.

This is a read-only view of your data, any modification to your data still needs to be made through notebooks here. This endpoint can be used to query data as well as define views and permissions.

The Synapse Data Warehouse is a SQL engine which is used to query and transform data in our Data Lake (OneLake) and has full transactional, DDL and DML query support. Data here also uses the Delta format in the same way the Lakehouse artifact does but an important difference is that that you would need to be using structured data. Working with Data Warehouse data happens in SQL which gives us transactional support and compatibility with existing T-SQL tools.

From here onwards I will be talking about the Synapse Data Warehouse when I say the ‘Data Warehouse Artifact’.

Side by Side Comparison of Features

Microsoft has created a great resource which lists each of the properties of our artifacts, I have left discussion of the Power BI Datamart out of this blog post.

| Data Warehouse | Lakehouse | Power BI Datamart | |

|---|---|---|---|

| Data volume | Unlimited | Unlimited | Up to 100 GB |

| Type of data | Structured | Unstructured, semi-structured, structured | Structured |

| Primary developer persona | Datawarehouse developer, SQL engineer | Data engineer, Data scientist | Citizen developer |

| Primary developer skill set | SQL | Spark (Scala, PySpark, Spark SQL, R) | No code, SQL |

| Data organized by | Databases, schemas and tables | Folders and files, databases and tables | Database, tables, queries |

| Read operations | Spark, T-SQL | Spark, T-SQL | Spark, T-SQL, Power BI |

| Write operations | T-SQL | Spark (Scala, PySpark, Spark SQL, R) | Dataflows, T-SQL |

| Multi-table transactions | Yes | No | No |

| Primary development interface | SQL scripts | Spark notebooks, Spark job definitions | Power BI |

| Security | Object leve (table, view, function, stored procedure, etc.), column level, row level, DDL/DML | Row level, table level (when using T-SQL), none for Spark | Built-in RLS editor |

| Access data via shortcuts | Yes (indirectly through the lakehouse) | Yes | No |

| Can be a source for shortcuts | Yes (tables) | Yes (files and tables) | No |

| Query across items | Yes, query across lakehouse and warehouse tables | Yes, query across lakehouse and warehouse tables; query across lakehouses (including shortcuts using Spark) | No |

Which one should I pick?

Working from the features table above we can see that there are a quite a few differences that set the artifacts apart.

Team Skillset

Despite there being technical reasons to choose a particular approach the team’s skillset should be considered. The Data Warehouse is aimed at those with more traditional SQL development skills whereas the Lakehouse is geared towards those who use Spark. Considering your teams skillsets is an important part of the decision making process.

Type of Data

The type of data that we are working with is the most stand-out of the above differences. Data comes in many forms but if you find yourself working with data types such as JSON then it makes much more sense to be working within the Lakehouse and utilising Notebooks within your workflow. Thinking about the future of your data is useful as well; Will your data format change? Will the volume of your data greatly increase? If the answer to these questions is yes, then consider the Lakehouse.

Write Operations (Languages) and Primary Development Interface

Once you pick a Lakehouse or Data Warehouse artifact, you must use what you have chosen for a given process unless you recreate it again. You can mix and match between Lakehouses and Data Warehouses for different processes, however. We can see from the table that the Data Warehouse artifact is SQL based whereas the Lakehouse is Spark based. Your processes might already exist within stored procedures within an existing SQL Data warehouse and so migrating them to a Data Warehouse artifact may make more sense if this is still deemed an appropriate solution.

Migration from Synapse

Migrating from an existing Synapse environment is another great reason to pick the Data Warehouse artifact. Otherwise, moving to a Lakehouse artifact would cost you time to rebuild things with notebooks.

Conclusion

Putting Microsoft’s persona approach aside for a moment, we can still see that there are a number of technical arguments to consider. Both artifacts use Delta under the hood but we can see that, ultimately, it still comes down to what you are working with and what you need to achieve when making that decision.

The persona approach is still important, however, as we need the relevant skills in a team to be able to engineer and support the solutions that are created. There is no limitation to picking just one of these options, there may be different teams which with their different preferences and skillsets can mix and match based upon their own requirements. For example, using a Lakehouse artifact to process your data in Spark but surfacing your data with the SQL endpoint.

If you want to read some more of our content on Fabric, check out our other blog posts:

10 Amazing Features of Microsoft Fabric

If you want to talk further about whether a Lakehouse or Warehouse is best for your needs, feel free to get in touch and have a chat with us.