Customer Retention with FLAML: Predict Churn with a Few Simple Lines of Code

Customer retention refers to a business's ability to keep its customers and prevent them from switching to a competitor. In simple terms, it is about keeping the customers you already have and making sure they are satisfied with your product or service. It is a crucial aspect of any successful business and brings several benefits, including increased revenue, cost savings, and improved brand loyalty. Research by Frederick Reichheld of Bain & Company found that increasing customer retention rates by just 5% can increase profits by 25% to 95%.

It is well established that machine learning models can be developed to detect churn. However, building these models has traditionally required in-depth knowledge and expertise. This is where FLAML comes in. In this article, we will delve into FLAML, a tool that enables users to build machine learning models quickly and easily.

What is FLAML?

FLAML is short for Fast and Lightweight Automated Machine Learning library. It is an open-source Python library for automated machine learning (AutoML), created by Microsoft researchers in 2021. It is designed to be fast, efficient, and user-friendly, which makes it suitable for a wide range of applications.

What sets FLAML apart from other automated machine learning libraries?

There are several excellent AutoML libraries available; however, there are a few reasons why FLAML is my AutoML library of choice:

FLAML automatically finds accurate, high-performing ML models while using few computational resources. In benchmarks reported by its authors, it outperformed top-ranked AutoML libraries as well as a commercial cloud-based AutoML service.

FLAML is integrated with Ray Tune, a Python library for running hyperparameter optimisation in parallel across multiple CPUs, GPUs or machines. This means you can shorten tuning and training times and make more efficient use of your resources.

FLAML is lightweight, easy to use, and can be integrated into existing machine learning pipelines. For example, MLflow Recipes, part of MLflow 2.0, uses FLAML for AutoML.

To summarise, FLAML is fast, scalable and very efficient!

How does it work?

FLAML's AutoML works by experimenting with various models and tuning their hyperparameters to build the best ML model possible. Finding a hyperparameter configuration with good performance often involves trial and error, which can be a computationally expensive and time-consuming process.
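To make "computationally expensive" concrete, here is a small back-of-the-envelope calculation for exhaustive grid search, used only as a familiar baseline (it is not what FLAML does). The hyperparameter counts and the 30-second fit time are hypothetical numbers chosen for illustration.

```python
from math import prod


def grid_search_trials(candidates_per_hyperparameter):
    """Number of model fits an exhaustive grid search needs."""
    return prod(candidates_per_hyperparameter)


def grid_search_hours(candidates_per_hyperparameter, seconds_per_fit):
    """Wall-clock hours for the full grid if fits run one after another."""
    return grid_search_trials(candidates_per_hyperparameter) * seconds_per_fit / 3600


# Hypothetical example: 6 hyperparameters, 10 candidate values each, 30 s per fit
trials = grid_search_trials([10] * 6)  # 1,000,000 fits
hours = grid_search_hours([10] * 6, seconds_per_fit=30)  # about 8,333 hours
print(trials, round(hours))
```

Even this modest grid would take close to a year of sequential compute, which is why search strategies that spend evaluations carefully matter.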

FLAML uses state-of-the-art hyperparameter optimisation algorithms. It leverages the structure of the search space to optimise for both cost and model performance simultaneously. It contains two novel methods developed by Microsoft Research:

Cost-Frugal Optimisation (CFO): This is a method for searching in a cost-effective way. The search begins at a low-cost initial point and gradually moves towards the high-cost region only if needed, all the while optimising the given objective (such as model loss or accuracy). It is a local search method based on randomised direct search with an adaptive step size and random restarts. However, like other local search methods, it can get stuck at a locally optimal point.
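The ingredients named above (a low-cost starting point, randomised direct search, an adaptive step size and random restarts) can be illustrated with a toy optimiser. This is a minimal sketch written for this article under simplifying assumptions, not FLAML's implementation: it works on a plain continuous vector, halves the step after a fixed number of failed moves (the patience and shrink factor are arbitrary choices), and restarts around the best point found once the step becomes tiny.

```python
import math
import random


def random_direction(dim, rng):
    """Random unit vector: a normalised Gaussian sample."""
    v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(c * c for c in v)) or 1.0
    return [c / norm for c in v]


def cfo_like_search(loss, x0, n_evals=300, step=1.0, min_step=1e-3, patience=4, seed=0):
    """Toy randomised direct search in the spirit of CFO; not FLAML's implementation.

    x0 should be the low-cost starting configuration. The search only moves away
    from it when a move lowers the loss, and stops after roughly n_evals calls.
    """
    rng = random.Random(seed)
    init_step = step
    x, fx = list(x0), loss(x0)
    best_x, best_f = list(x), fx
    evals, fails = 1, 0
    while evals < n_evals:
        u = random_direction(len(x), rng)
        improved = False
        for sign in (1.0, -1.0):  # try x + step*u, then x - step*u
            cand = [xi + sign * step * ui for xi, ui in zip(x, u)]
            fc = loss(cand)
            evals += 1
            if fc < fx:
                x, fx, improved = cand, fc, True
                break
        if improved:
            fails = 0
        else:
            fails += 1
            if fails >= patience:  # adaptive step: shrink after repeated failures
                step, fails = step * 0.5, 0
        if step < min_step:  # stuck locally: random restart around the incumbent
            x = [xi + rng.gauss(0.0, init_step) for xi in best_x]
            fx, step = loss(x), init_step
            evals += 1
        if fx < best_f:
            best_x, best_f = list(x), fx
    return best_x, best_f


# Usage: minimise a 2-D quadratic whose optimum is at (3, -1)
best_x, best_f = cfo_like_search(lambda v: (v[0] - 3) ** 2 + (v[1] + 1) ** 2, [0.0, 0.0])
print(best_x, best_f)
```

Because every accepted move must lower the loss, the search only drifts away from the cheap starting point when doing so pays off, which is the intuition behind CFO's frugality.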

BlendSearch: This is an extension of CFO that combines the frugality of CFO with the space-exploration ability of global search methods such as Bayesian optimisation, making it less likely to get stuck at local optima.
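The idea of blending global exploration with frugal local search can be sketched as follows. This is a deliberately simplified illustration, not the BlendSearch algorithm: uniform random sampling stands in for Bayesian optimisation as the global method, and each round simply refines the most promising global sample with a short CFO-style local search. The round counts, the 0.7 shrink factor and the initial step of 10% of the search range are arbitrary assumptions.

```python
import math
import random


def clip(x, bounds):
    """Keep a point inside the box defined by bounds."""
    return [min(max(xi, lo), hi) for xi, (lo, hi) in zip(x, bounds)]


def local_search(loss, x, fx, bounds, step, iters, rng):
    """Short randomised direct search (CFO-style) starting from x."""
    for _ in range(iters):
        u = [rng.gauss(0.0, 1.0) for _ in x]
        norm = math.sqrt(sum(c * c for c in u)) or 1.0
        for sign in (1.0, -1.0):
            cand = clip([xi + sign * step * ui / norm for xi, ui in zip(x, u)], bounds)
            fc = loss(cand)
            if fc < fx:
                x, fx = cand, fc
                break
        else:
            step *= 0.7  # neither direction helped: shrink the step
    return x, fx


def blend_like_search(loss, bounds, rounds=10, global_samples=5, local_iters=20, seed=0):
    rng = random.Random(seed)
    best_x, best_f = None, math.inf
    for _ in range(rounds):
        # Global phase: uniform random sampling stands in for Bayesian optimisation
        starts = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(global_samples)]
        f0, x0 = min(((loss(s), s) for s in starts), key=lambda t: t[0])
        # Local phase: refine the most promising global point
        step = 0.1 * min(hi - lo for lo, hi in bounds)
        x, fx = local_search(loss, x0, f0, bounds, step, local_iters, rng)
        if fx < best_f:
            best_x, best_f = x, fx
    return best_x, best_f


# Usage on a multimodal function with many local minima (global minimum 0 at the origin)
def rastrigin(v):
    return sum(x * x + 10 * (1 - math.cos(2 * math.pi * x)) for x in v)


print(blend_like_search(rastrigin, bounds=[(-5.0, 5.0), (-5.0, 5.0)]))
```

A pure local search started in the wrong basin of a function like this stays there; fresh global samples give the local phase new, potentially better basins to refine.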

By combining these approaches, FLAML can perform automated hyperparameter optimisation significantly faster than traditional approaches.

For more information on the technical details of CFO and BlendSearch, check out these papers:

Frugal Optimization for Cost-related Hyperparameters. Qingyun Wu, Chi Wang, Silu Huang. AAAI 2021.

Economical Hyperparameter Optimization With Blended Search Strategy. Chi Wang, Qingyun Wu, Silu Huang, Amin Saied. ICLR 2021.

We will see how this works by going through an example.

A walkthrough: building a churn prediction model with FLAML

Let’s take a look at how to build a machine learning model with FLAML to predict churn in Databricks. For this walkthrough, we will use the Telecom Customer Churn dataset available from Kaggle. The objective is to predict customer churn by assessing each customer's propensity, or risk, to churn. In this dataset of over 7,000 customers, 26% have churned. This is a large percentage! Bearing in mind that acquiring new customers is more expensive than keeping existing ones, it is important for a business to be able to predict churn.
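A 26% churn rate also sets a useful reference point for judging model accuracy: a trivial model that predicts "no churn" for every customer is already right about 74% of the time. The helper below computes that baseline from a list of labels; it assumes churn is coded as "Yes"/"No", as in the Kaggle file's Churn column.

```python
def churn_rate(labels):
    """Fraction of customers labelled as churned ("Yes")."""
    return sum(1 for label in labels if label == "Yes") / len(labels)


def majority_baseline_accuracy(labels):
    """Accuracy of a trivial model that predicts "No" (no churn) for everyone."""
    return 1.0 - churn_rate(labels)


labels = ["Yes", "No", "No", "No"]
print(churn_rate(labels), majority_baseline_accuracy(labels))  # 0.25 0.75
```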

As with most machine learning projects, the Databricks notebook is divided into the following sections:

🔎 Basic EDA

⚙ Feature Preprocessing

⭐ Dataset Preparation

🔥 FLAML AutoML

👩🏽‍💻 Retrieve and Analyse the outcomes of AutoML.fit()

💥 Compute Predictions on the Test Dataset

📝 Log history

The entire notebook can be downloaded from this link.
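The feature preprocessing and dataset preparation steps might look roughly like the sketch below. It is not the notebook's code: it assumes the column names of the Kaggle Telco Customer Churn CSV (customerID, TotalCharges, Churn with "Yes"/"No" values), assumes TotalCharges may contain blank strings that need coercing, and leaves string columns as pandas categoricals rather than one-hot encoding them, since FLAML can work with categorical columns. The CSV file name in the usage comment is an assumption.

```python
import pandas as pd
from sklearn.model_selection import train_test_split


def prepare_churn_data(df: pd.DataFrame, test_size: float = 0.2, seed: int = 42):
    """Split a Telco-churn-style DataFrame into train/test features and labels."""
    df = df.drop(columns=["customerID"])  # an identifier, not a feature
    # TotalCharges may contain blank strings: coerce to numeric, drop unparseable rows
    df["TotalCharges"] = pd.to_numeric(df["TotalCharges"], errors="coerce")
    df = df.dropna(subset=["TotalCharges"]).copy()
    y = (df.pop("Churn") == "Yes").astype(int)  # 1 = churned, 0 = retained
    # Mark the remaining non-numeric columns as categorical
    for col in df.columns:
        if not pd.api.types.is_numeric_dtype(df[col]):
            df[col] = df[col].astype("category")
    # Stratify so the train and test sets keep the same churn rate
    return train_test_split(df, y, test_size=test_size, stratify=y, random_state=seed)


# Usage (file name is an assumption; use the path of your downloaded copy):
# raw = pd.read_csv("WA_Fn-UseC_-Telco-Customer-Churn.csv")
# X_train, X_test, y_train, y_test = prepare_churn_data(raw)
```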

With just a few lines of code, we can develop a machine learning model with relatively high accuracy. In this example, FLAML built a model in 60 seconds that achieved an accuracy of 82%! That’s pretty impressive.

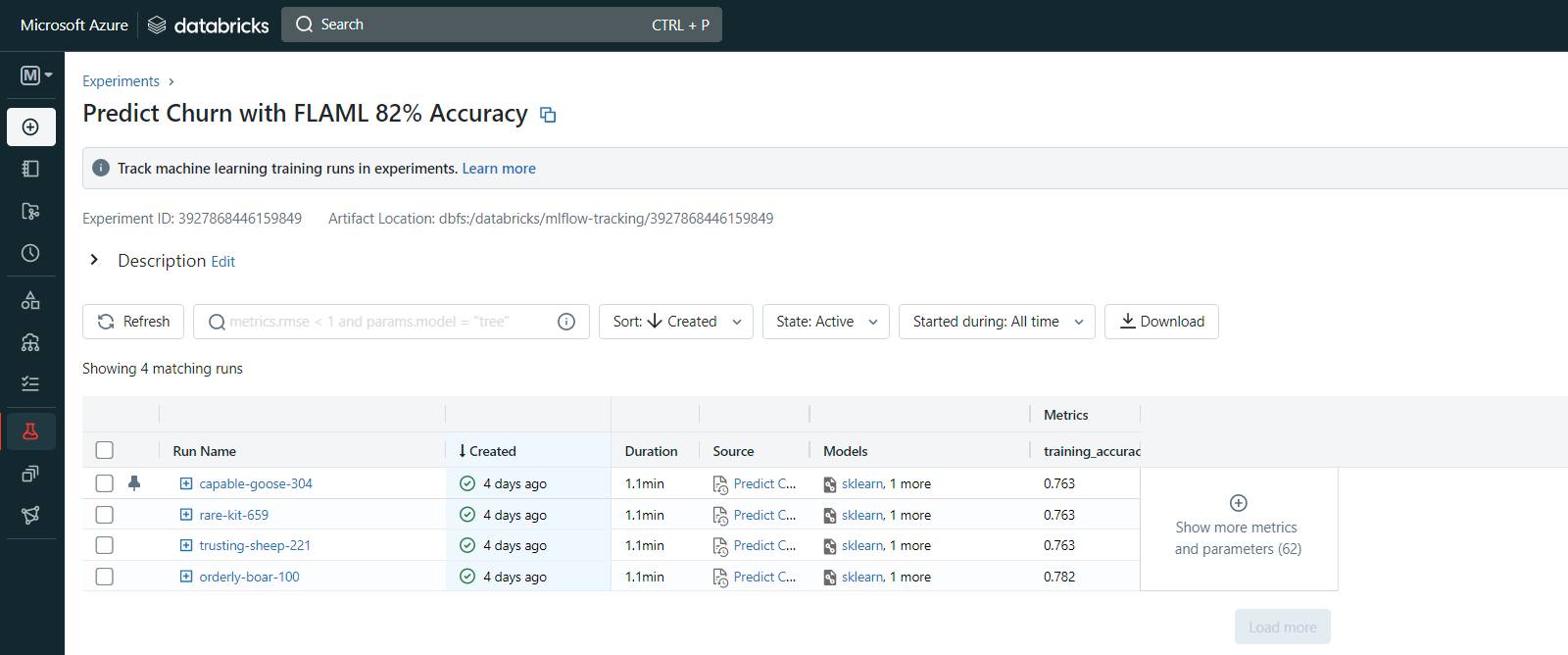

Using Databricks also means we can leverage MLflow, which not only makes it easy to compare and analyse the results of multiple runs through its intuitive user interface but also lets us deploy trained models with just a few clicks. This further streamlines the model development and deployment process, saving time and effort. If you are using FLAML without MLflow, the configuration of each trial can instead be saved to a log file by passing a ‘log_file_name’ to AutoML.fit().

And that’s it. Hopefully, you have now seen how easy it is to build a machine learning model to predict churn using FLAML. It can also be used to solve other types of machine learning problems, including time series forecasting, regression, and natural language processing tasks.

What makes FLAML stand out?

FLAML has incorporated a new feature called “zero-shot AutoML”, also known as “no-tuning” AutoML. Zero-shot AutoML uses hyperparameter configurations mined offline from a collection of datasets to automatically select a hyperparameter configuration for a new dataset, without any tuning. This saves the time and resources that tuning would otherwise require, and can give better performance than a library's default hyperparameters. The name is borrowed from zero-shot learning, a technique used in areas such as computer vision, where a model can classify objects or scenes that it has not been trained on.
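The selection step behind zero-shot AutoML can be illustrated with a toy: keep a small portfolio of configurations that worked well on datasets seen offline, describe a new dataset by a few meta-features, and reuse the configuration of the most similar offline dataset, with no tuning trials at all. Everything below (the portfolio, its values, the two meta-features and the nearest-neighbour rule) is hypothetical and only mimics the idea; FLAML's own method is more sophisticated. In FLAML itself, zero-shot AutoML is exposed through drop-in estimators in the flaml.default module; check the documentation for your version.

```python
import math

# Hypothetical offline portfolio: meta-features of datasets seen offline and
# a configuration that worked well on each (values are illustrative only)
PORTFOLIO = [
    ({"n_rows": 1_000, "n_features": 10}, {"n_estimators": 50, "num_leaves": 8}),
    ({"n_rows": 100_000, "n_features": 50}, {"n_estimators": 500, "num_leaves": 64}),
    ({"n_rows": 10_000_000, "n_features": 200}, {"n_estimators": 2000, "num_leaves": 256}),
]


def meta_vector(meta):
    """Log-scale the meta-features so dataset size differences are comparable."""
    return (math.log10(meta["n_rows"]), math.log10(meta["n_features"]))


def suggest_config(meta):
    """Return the portfolio config of the nearest offline dataset; no tuning runs."""
    target = meta_vector(meta)
    _, config = min(PORTFOLIO, key=lambda item: math.dist(meta_vector(item[0]), target))
    return dict(config)


# Roughly the size of the churn dataset (feature count is an illustrative guess)
print(suggest_config({"n_rows": 7_000, "n_features": 20}))
```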

What other features are the FLAML team working on?

The FLAML team has announced many upcoming features; have a look at the Roadmap for Upcoming Features. I am particularly looking forward to the update on Feature Selection, where FLAML automatically selects the best features for the ML model, and to seeing explainability built into FLAML.

Conclusion

FLAML is a fairly new and highly effective AutoML tool that can quickly produce a machine learning model with a well-tuned set of hyperparameters. This, however, is just a small portion of the whole machine learning lifecycle. A data scientist still has to deal with data pre-processing, feature engineering, and model deployment. When data scientists use FLAML, they can spend more of their time on these stages, such as preparing data and deploying models. FLAML is a great way to get started building machine learning models quickly and with little effort.

FLAML is not your typical AutoML solution: it gives you the flexibility to make it as simple as you would like it to be (generating a model in a few lines of code) or as complex as you need it to be (allowing fine-grained control over the machine learning process). You can easily use FLAML to generate machine learning models for various types of problems, ranging from classification and regression to NLP tasks. Whether you're a researcher, a developer, or just someone looking to get started with machine learning, FLAML is worth checking out.