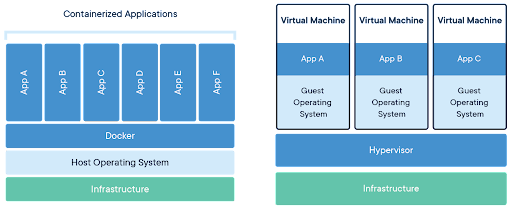

Docker and virtual machines (VMs) are powerful cloud computing technologies that help companies optimize their compute resources through virtualization, or the process of creating a virtual representation of something. In this case, the thing being virtualized is a computer.

The key difference is that VMs virtualize the computer hardware, while Docker virtualizes only the operating system. Another way to put it is that Docker allows multiple workloads to run on a single OS instance, while VMs allow the running of multiple OS instances. Since they’re complementary technologies, Docker is commonly used with VMs to optimize cloud-computing resources and performance (more on that later).

To better understand the difference between Docker and VM technology, how they’re used, and why they’re beneficial to companies, let’s first define our terms.

What Is Docker and What Are Containers?

Docker is an open source platform for building and running applications inside of containers. Containers are standardized units of software containing all the code and dependencies—including binaries, libraries, and configuration files—needed for an app to run.

Although container technology has been around for a long time, Docker’s debut in 2013 made containerization mainstream. Docker is often used in conjunction with container orchestration platforms such as Kubernetes. Docker is considered a type of container “runtime,” or program that runs the container on a host operating system. Other container runtimes include containerd, Windows Containers, and run
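To make the idea of a container runtime more concrete, here is a minimal Python sketch that assembles a `docker run` command line. The helper names `build_run_command` and `run_if_available` and the image tag `alpine:3.19` are illustrative assumptions. `--rm`, `--memory`, and `--cpus` are standard `docker run` flags.

```python
import shutil
import subprocess
from typing import List, Optional


def build_run_command(image: str, command: List[str],
                      memory: Optional[str] = None,
                      cpus: Optional[float] = None) -> List[str]:
    """Assemble arguments for a one-off `docker run` (hypothetical helper)."""
    args = ["docker", "run", "--rm"]  # --rm: delete the container after it exits
    if memory is not None:
        args += ["--memory", memory]  # memory limit, e.g. "256m"
    if cpus is not None:
        args += ["--cpus", str(cpus)]  # CPU limit, e.g. 0.5 of one CPU
    return args + [image] + command


def run_if_available(args: List[str]) -> Optional[int]:
    """Run the command only if the docker CLI is on PATH; return its exit code, else None."""
    if shutil.which(args[0]) is None:
        return None
    return subprocess.run(args, check=False).returncode


if __name__ == "__main__":
    cmd = build_run_command("alpine:3.19", ["echo", "hello from a container"],
                            memory="256m", cpus=0.5)
    print(" ".join(cmd))
```

On a machine where Docker is installed and its daemon is running, calling `run_if_available(cmd)` should ask Docker to pull the image if needed, then create, start, and clean up the container. This is the runtime role described above.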

Originally built on Linux kernel features, containers have become an industry standard for application and software development. They solve the issue of environment inconsistency. Developers can write code locally (say, on their laptop) and then deploy it to any environment with a compatible container runtime, without worrying about bugs and other issues caused by environmental differences such as versions, permissions, and database access.

What Is a Virtual Machine?

A VM is a computing resource that uses software, instead of a dedicated physical computer, to run programs and deploy apps. Through VMs, one or more virtual “guest” machines can run on a physical “host” machine. For example, a Linux VM can run on a physical Windows PC. To share the host machine’s resources among its guests, VMs rely on a hypervisor.

What Is a Hypervisor?

A hypervisor, also known as a virtual machine monitor (VMM), is software that creates and runs virtual machines. It enables a single host computer to support multiple guest VMs by virtually sharing resources such as memory and processing power. This allows for more efficient use of physical servers. IT teams can shift workloads and allocate networking, memory, storage, and processing resources across multiple servers as needed.
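The resource-sharing role of a hypervisor can be illustrated with a deliberately simplified Python model. It is a sketch under stated assumptions, not how any real hypervisor works. The class names `GuestVM` and `ToyHypervisor` are hypothetical. The model reserves host vCPUs and memory for each guest and rejects guests that would exceed physical capacity. Real hypervisors commonly overcommit CPU, and sometimes memory, so this strict accounting is an assumption made for clarity.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class GuestVM:
    name: str
    vcpus: int
    memory_gb: int


@dataclass
class ToyHypervisor:
    """Hypothetical model: strict reservation of host resources, no overcommit."""
    host_cpus: int
    host_memory_gb: int
    guests: List[GuestVM] = field(default_factory=list)

    def free_cpus(self) -> int:
        return self.host_cpus - sum(g.vcpus for g in self.guests)

    def free_memory_gb(self) -> int:
        return self.host_memory_gb - sum(g.memory_gb for g in self.guests)

    def create_vm(self, vm: GuestVM) -> bool:
        # Refuse the guest if either resource would exceed what the host has left.
        if vm.vcpus > self.free_cpus() or vm.memory_gb > self.free_memory_gb():
            return False
        self.guests.append(vm)
        return True


if __name__ == "__main__":
    hv = ToyHypervisor(host_cpus=16, host_memory_gb=64)
    for vm in [GuestVM("web", 4, 16), GuestVM("db", 8, 32), GuestVM("batch", 8, 32)]:
        print(vm.name, "created" if hv.create_vm(vm) else "rejected: not enough capacity")
```

With these made-up sizes, `web` and `db` fit on the 16-CPU, 64 GB host. `batch` is rejected because only 4 vCPUs remain.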

Figure 1: Docker architecture vs. VM architecture

Source: Docker

Docker vs. VM: Advantages and Disadvantages

By encapsulating software into self-contained units, a Docker container lets you run an app in an isolated environment without the overhead of a hypervisor and a full guest OS for each app. A container does not virtualize an entire OS and server. It packages only the app and the software dependencies it needs to run, and it shares the OS kernel of the host machine. For this reason, Docker containers consume far fewer resources on your physical servers than VMs do. Containers can also start and scale in a matter of seconds because they don’t need to boot their own operating system.
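Because a container has no kernel of its own, a process inside it sees the host’s kernel. The minimal Python sketch below reports the kernel release the current process sees. It also checks for `/.dockerenv`, a file Docker has conventionally created inside its containers. That check is only a heuristic and an assumption here, not a guaranteed interface, and other runtimes do not create the file. The function name `describe_runtime_environment` is hypothetical.

```python
import os
import platform


def describe_runtime_environment() -> dict:
    """Report the kernel this process sees plus a heuristic Docker check."""
    return {
        "system": platform.system(),           # e.g. "Linux"
        "kernel_release": platform.release(),  # kernel release string seen by this process
        # Heuristic only: Docker has conventionally created /.dockerenv in containers.
        "dockerenv_present": os.path.exists("/.dockerenv"),
    }


if __name__ == "__main__":
    for key, value in describe_runtime_environment().items():
        print(f"{key}: {value}")
```

Running the script directly on a Linux host and then inside a container on that same host should print the same kernel release. On Docker Desktop for macOS or Windows, Linux containers run inside a lightweight Linux VM, so the reported kernel is that VM’s. This is itself an example of Docker running on top of a VM.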

That said, VMs offer certain advantages over Docker. Each VM runs its own full operating system and is isolated from the host and from other VMs by the hypervisor, so VMs provide strong isolation and security. They also come with mature management tools and give apps access to all the resources and functionality of a full OS. And since they can run different operating systems on one physical machine, they also reduce costs by cutting the number of physical servers required.

Docker vs. VM Use Cases

Both Docker and VMs make running multiple applications in production easier. The difference lies in what each optimizes. VMs help you optimize your infrastructure resources by letting you run more machines on the same hardware. Docker containers help you make the most of your development resources by enabling practices such as microservices and DevOps.

Since many cloud providers depend on VM technology to provide users with their personal servers, running Docker containers in the cloud usually means running them on top of VMs that have been provisioned for you. So the real question is usually not Docker or VMs, but Docker on VMs or VMs alone.

The goal should be to reduce computing costs by using container technology to improve deployment density and make applications more lightweight and portable so you can run multiple applications on a single VM rather than having to use multiple VMs.
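The density argument can be made concrete with some back-of-the-envelope arithmetic. This Python sketch compares memory use for one VM per app against all apps running as containers on a single VM. All figures are hypothetical, not measurements. Per-container runtime overhead is assumed to be negligible, and CPU, disk, and isolation requirements are ignored.

```python
def memory_one_vm_per_app(apps: int, app_gb: float, guest_os_gb: float) -> float:
    """Each app gets its own VM, so every VM also pays for a full guest OS."""
    return apps * (app_gb + guest_os_gb)


def memory_containers_on_one_vm(apps: int, app_gb: float, guest_os_gb: float) -> float:
    """All apps run as containers in one VM: one guest OS, one shared kernel.
    Per-container runtime overhead is assumed to be negligible here."""
    return guest_os_gb + apps * app_gb


if __name__ == "__main__":
    apps, app_gb, guest_os_gb = 10, 0.5, 1.5  # hypothetical figures, not measurements
    print("one VM per app:", memory_one_vm_per_app(apps, app_gb, guest_os_gb), "GB")
    print("containers on one VM:", memory_containers_on_one_vm(apps, app_gb, guest_os_gb), "GB")
```

With these assumed numbers, ten apps need 20.0 GB as separate VMs but 6.5 GB as containers on one VM. The guest OS cost is paid once instead of ten times. The trade-off is that all ten apps then share one kernel and one VM as a failure domain.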

You should use Docker containers with VMs to:

- Maximize the number of apps running on a single OS kernel

- Deploy multiple instances of a single app

- Make efficient use of compute and storage resources

You should use VMs alone for:

- Prioritizing isolation and security

- Running multiple apps with different OS dependencies on a single server

- Running an app that needs all the resources and functionalities of an OS

In short, if you have monolithic applications that you don’t need to refactor into microservices, using VMs alone should work. However, Docker containers (with VMs) are better for developing and deploying newer, cloud-native applications on a microservices architecture.
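The guidance in the two checklists and the summary can be encoded as a small decision helper. This Python sketch is one possible reading of that guidance, not a definitive rule set. The field names are hypothetical. Giving the “VMs alone” criteria precedence over the container criteria is an assumption made here for simplicity.

```python
from dataclasses import dataclass


@dataclass
class WorkloadProfile:
    """Hypothetical yes/no answers about a workload."""
    needs_strong_isolation: bool = False
    apps_need_different_oses: bool = False
    needs_full_os: bool = False
    cloud_native_microservices: bool = False
    many_instances_or_high_density: bool = False


def suggest_platform(profile: WorkloadProfile) -> str:
    # Assumption: any "VMs alone" criterion outweighs the container criteria.
    if (profile.needs_strong_isolation
            or profile.apps_need_different_oses
            or profile.needs_full_os):
        return "VMs alone"
    if profile.cloud_native_microservices or profile.many_instances_or_high_density:
        return "Docker containers on VMs"
    # Monolithic apps that won't be refactored: VMs alone should work.
    return "VMs alone"


if __name__ == "__main__":
    print(suggest_platform(WorkloadProfile(cloud_native_microservices=True)))
    print(suggest_platform(WorkloadProfile(needs_strong_isolation=True)))
```

In practice, isolation needs and density goals often pull in different directions for different parts of the same system. A real decision would weigh them per workload rather than through a single boolean check.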

Get the Most Out of Container Orchestration with Portworx

Docker containers work best when used in conjunction with container orchestration tools.

Pure Service Orchestrator™ is a container orchestration tool that delivers persistent storage resources to containerized applications. Pure Service Orchestrator combines elastic scaling, smart provisioning, and transparent recovery to deliver containers as a service. It also integrates seamlessly with Kubernetes and other container orchestration platforms to provide:

- Automated storage delivery on demand

- Policy-based provisioning

- Elastic scaling across all arrays, including hybrid cloud

- Intelligent container deployment across file and block arrays

- Enterprise-grade resiliency with automated failover and self-healing data access integrity

