This blog on Dremio S3 and NFS integration was originally published on Medium. It has been republished with the author’s credit and consent.

In this blog, I’ll go over how you can use fast NFS and S3 from Pure Storage to power your Dremio Kubernetes deployments.

Dremio Distributed Storage

First, I change the distStorage section in the values.yaml file to reflect my S3 bucket, access and secret keys, as well as the endpoint of the Pure Storage® FlashBlade®:

```yaml
distStorage:
  type: aws
  aws:
    bucketName: "dremio"
    path: "/"
    authentication: "accessKeySecret"
    credentials:
      accessKey: "PSFB...JEIA"
      secret: "A121...JOEN"
    extraProperties: |
      <property>
        <name>fs.s3a.endpoint</name>
        <value>192.168.2.2</value>
      </property>
      <property>
        <name>fs.s3a.connection.ssl.enabled</name>
        <value>false</value>
      </property>
      <property>
        <name>dremio.s3.compat</name>
        <value>true</value>
      </property>
      <property>
        <name>fs.s3a.path.style.access</name>
        <value>true</value>
      </property>
```
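The `extraProperties` value is just a string of Hadoop-style `<property>` elements. If you manage several environments, generating that string from a dictionary avoids XML typos. The sketch below is a hypothetical helper (`render_extra_properties` is not part of the Dremio chart); it escapes names and values with the standard library and indents by six spaces, assuming a values file laid out like the chart's default so the output nests under `extraProperties: |`.

```python
from xml.sax.saxutils import escape


def render_extra_properties(props: dict[str, str], indent: int = 6) -> str:
    """Render Hadoop-style <property> elements for the extraProperties block.

    Hypothetical helper for illustration; the Helm chart only takes a string.
    """
    pad = " " * indent
    lines = []
    for name, value in props.items():
        lines.append(f"{pad}<property>")
        lines.append(f"{pad}  <name>{escape(name)}</name>")
        lines.append(f"{pad}  <value>{escape(value)}</value>")
        lines.append(f"{pad}</property>")
    return "\n".join(lines)


# The four S3A/Dremio properties used in the values.yaml fragment above.
FLASHBLADE_S3A_PROPS = {
    "fs.s3a.endpoint": "192.168.2.2",
    "fs.s3a.connection.ssl.enabled": "false",
    "dremio.s3.compat": "true",
    "fs.s3a.path.style.access": "true",
}

if __name__ == "__main__":
    print(render_extra_properties(FLASHBLADE_S3A_PROPS))
```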

With the above in place, I deploy to my Dremio namespace using the following Helm command:

```shell
~/dremio-cloud-tools/charts/dremio_v2$ helm install dremio ./ -f values.yaml -n dremio
```
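Before connecting to the UI, it can help to confirm that every pod reports Ready. The sketch below reads the output of `kubectl -n dremio get pods -o json` saved to a file and lists any pod whose standard `Ready` condition is not `"True"`; `pods_not_ready` and the file name are hypothetical.

```python
import json
import sys


def pods_not_ready(pod_list: dict) -> list[str]:
    """Return the names of pods whose Ready condition is not "True".

    `pod_list` is the parsed output of `kubectl get pods -o json`.
    """
    not_ready = []
    for pod in pod_list.get("items", []):
        conditions = pod.get("status", {}).get("conditions", [])
        ready = any(c.get("type") == "Ready" and c.get("status") == "True" for c in conditions)
        if not ready:
            not_ready.append(pod["metadata"]["name"])
    return not_ready


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: kubectl -n dremio get pods -o json > pods.json; python check_pods.py pods.json
    with open(sys.argv[1]) as f:
        pending = pods_not_ready(json.load(f))
    print("all pods ready" if not pending else "not ready: " + ", ".join(pending))
```

If you only need a yes/no answer, `kubectl -n dremio wait --for=condition=Ready pod --all --timeout=300s` performs the same check natively; the Python version is handy when you want to log which pods are still pending.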

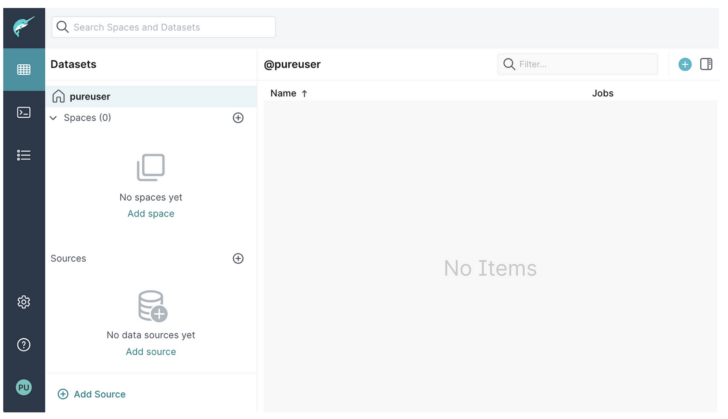

Once the pods are up and running, I can connect to the webUI on the service port, and after creating the admin account, I’m presented with the Dremio interface:

Let’s take a moment to check what has been created in the S3 bucket I specified for the distStorage:

```shell
$ aws s3api list-objects-v2 --bucket dremio --profile fbstaines03 --no-verify-ssl --endpoint-url=https://192.168.40.165
  InsecureRequestWarning,
{
    "Contents": [
        {
            "LastModified": "2023-08-09T15:19:58.000Z",
            "ETag": "d41d8cd98f00b204e9800998ecf8427e",
            "StorageClass": "STANDARD",
            "Key": "accelerator/",
            "Size": 0
        },
        {
            "LastModified": "2023-08-09T15:19:58.000Z",
            "ETag": "d41d8cd98f00b204e9800998ecf8427e",
            "StorageClass": "STANDARD",
            "Key": "downloads/",
            "Size": 0
        },
        {
            "LastModified": "2023-08-09T15:19:58.000Z",
            "ETag": "d41d8cd98f00b204e9800998ecf8427e",
            "StorageClass": "STANDARD",
            "Key": "metadata/",
            "Size": 0
        },
        {
            "LastModified": "2023-08-09T15:19:58.000Z",
            "ETag": "d41d8cd98f00b204e9800998ecf8427e",
            "StorageClass": "STANDARD",
            "Key": "scratch/",
            "Size": 0
        },
        {
            "LastModified": "2023-08-09T15:20:43.000Z",
            "ETag": "d41d8cd98f00b204e9800998ecf8427e",
            "StorageClass": "STANDARD",
            "Key": "uploads/_staging.dremio-executor-0.dremio-cluster-pod.dremio.svc.cluster.local/",
            "Size": 0
        },
        {
            "LastModified": "2023-08-09T15:20:33.000Z",
            "ETag": "d41d8cd98f00b204e9800998ecf8427e",
            "StorageClass": "STANDARD",
            "Key": "uploads/_staging.dremio-executor-1.dremio-cluster-pod.dremio.svc.cluster.local/",
            "Size": 0
        },
        {
            "LastModified": "2023-08-09T15:19:57.000Z",
            "ETag": "d41d8cd98f00b204e9800998ecf8427e",
            "StorageClass": "STANDARD",
            "Key": "uploads/_staging.dremio-master-0.dremio-cluster-pod.dremio.svc.cluster.local/",
            "Size": 0
        },
        {
            "LastModified": "2023-08-09T15:19:58.000Z",
            "ETag": "d41d8cd98f00b204e9800998ecf8427e",
            "StorageClass": "STANDARD",
            "Key": "uploads/_uploads/",
            "Size": 0
        }
    ]
}
```

According to the official documentation, the distributed storage location contains accelerator, table, job result, download, upload, and scratch data. In my output, the uploads/_staging… objects correspond to the nodes deployed in my test Dremio cluster: one master and two executors.
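To see this layout at a glance, the JSON from that `aws s3api list-objects-v2` call can be summarized by top-level prefix. The sketch below is a hypothetical helper, not part of any Dremio tooling; it assumes the CLI output has been redirected to a file (the urllib3 warning is written to stderr, so redirecting stdout is enough).

```python
import json
import sys
from collections import Counter


def summarize_dist_storage(listing: dict) -> tuple[Counter, list[str]]:
    """Count objects per top-level prefix and collect per-node staging folders.

    `listing` is the parsed JSON printed by `aws s3api list-objects-v2`.
    """
    prefixes: Counter = Counter()
    staging_nodes: list[str] = []
    for obj in listing.get("Contents", []):
        parts = obj["Key"].split("/")
        prefixes[parts[0]] += 1
        # uploads/_staging.<pod FQDN>/ appears once for each Dremio node.
        if parts[0] == "uploads" and len(parts) > 1 and parts[1].startswith("_staging."):
            staging_nodes.append(parts[1][len("_staging."):])
    return prefixes, staging_nodes


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: aws s3api list-objects-v2 ... > listing.json; python summarize.py listing.json
    with open(sys.argv[1]) as f:
        counts, nodes = summarize_dist_storage(json.load(f))
    for prefix, n in sorted(counts.items()):
        print(f"{prefix}/: {n} object(s)")
    for node in nodes:
        print("staging folder for", node.split(".")[0])
```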

Dremio S3 Source

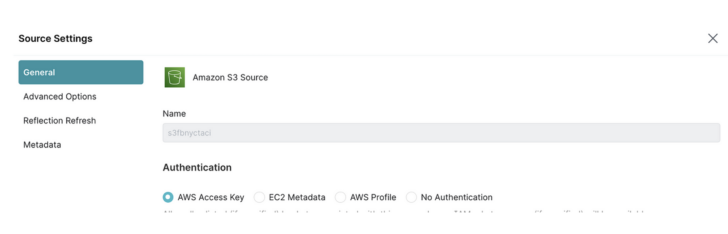

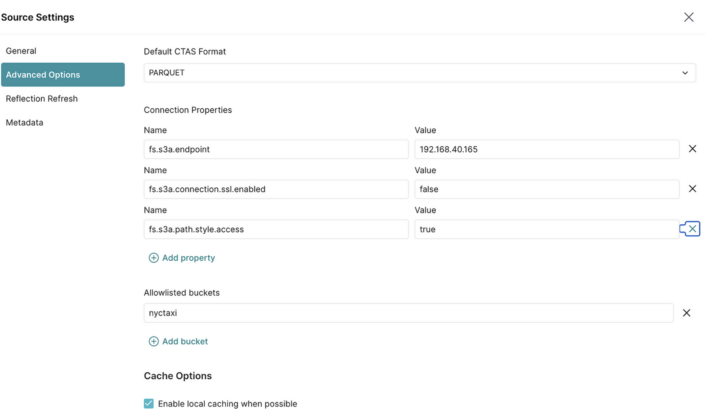

I then add an S3 source and provide my FlashBlade S3 user access and secret keys, as well as the required additional parameters:

Note: I unchecked “encrypt connection” on the S3 General page. Also, fs.s3a.path.style.access can be true|false.\
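`fs.s3a.path.style.access` controls how a client addresses the bucket: path-style access puts the bucket name in the URL path, while virtual-hosted style makes it part of the hostname, which generally needs a DNS entry per bucket. The hypothetical `object_url` helper below only builds the two URL forms to illustrate the difference; it does not talk to FlashBlade and is not how Dremio or the S3A connector is implemented.

```python
from urllib.parse import quote


def object_url(endpoint: str, bucket: str, key: str,
               path_style: bool, ssl: bool = False) -> str:
    """Build the request URL for bucket/key in either S3 addressing style.

    Illustration only; real S3 clients also sign and route requests.
    """
    scheme = "https" if ssl else "http"
    encoded_key = quote(key)  # "/" stays unescaped (quote's default safe="/")
    if path_style:
        # Path-style: the bucket name is the first path segment.
        return f"{scheme}://{endpoint}/{bucket}/{encoded_key}"
    # Virtual-hosted style: the bucket name becomes part of the hostname.
    return f"{scheme}://{bucket}.{endpoint}/{encoded_key}"
```

With a bare IP endpoint such as 192.168.2.2, the virtual-hosted form yields a hostname like `dremio.192.168.2.2`, which shows why path-style addressing is the simpler choice when the endpoint is an address rather than a DNS name.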

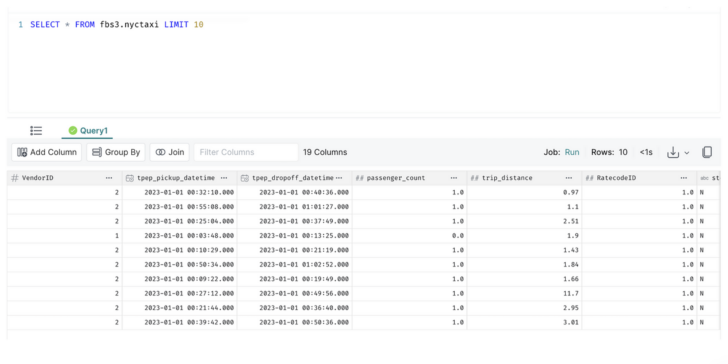

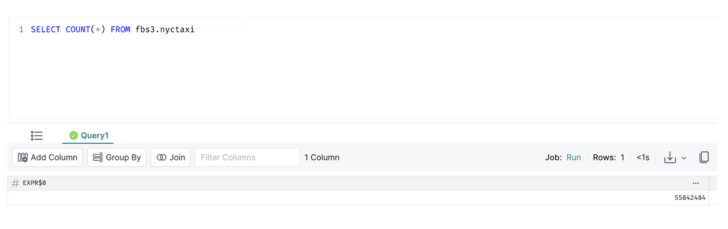

I quickly check the first 10 rows of data with a simple SQL query:

After this, new objects appear in the distributed storage bucket:

```shell
$ aws s3api list-objects-v2 --bucket dremio --profile fbstaines03 --no-verify-ssl --endpoint-url=https://192.168.40.165
/usr/lib/fence-agents/bundled/urllib3/connectionpool.py:1050: InsecureRequestWarning: Unverified HTTPS request is being made to host '192.168.40.165'. Adding certificate verification is strongly advised. See: https://urllib3.readthedocs.io/en/1.26.x/advanced-usage.html#ssl-warnings
  InsecureRequestWarning,
{
    "Contents": [
        ...
        {
            "LastModified": "2023-08-09T17:17:25.000Z",
            "ETag": "dc4c0576740d2528518cac2ca40ff85a",
            "StorageClass": "STANDARD",
            "Key": "metadata/26752429-ab57-467d-a786-dd6a1c66a8b8/metadata/00000-3d2c0450-2715-45da-a5ba-4779e34801fc.metadata.json",
            "Size": 5858
        },
        {
            "LastModified": "2023-08-09T17:17:24.000Z",
            "ETag": "7e931afe5a5b2a2b6196d69d8b46275a",
            "StorageClass": "STANDARD",
            "Key": "metadata/26752429-ab57-467d-a786-dd6a1c66a8b8/metadata/2cf233b4-4655-4598-89c5-94344c7cd0f1.avro",
            "Size": 6849
        },
        {
            "LastModified": "2023-08-09T17:17:25.000Z",
            "ETag": "89feb5b9357dd8ff2d052880fcca72a7",
            "StorageClass": "STANDARD",
            "Key": "metadata/26752429-ab57-467d-a786-dd6a1c66a8b8/metadata/snap-7017972300860934048-1-3e6e8b78-baee-4755-8bc4-98b7f20ab28f.avro",
            "Size": 3771
        },
        {
            "LastModified": "2023-08-09T17:21:25.000Z",
            "ETag": "b1a981d365063dc7a3e61d0864747a2f",
            "StorageClass": "STANDARD",
            "Key": "metadata/81ceabb1-20ba-427b-a507-f6c2243588a0/metadata/00000-495d50d3-3215-4f70-ae08-d3f181ae32e2.metadata.json",
            "Size": 5858
        },
        {
            "LastModified": "2023-08-09T17:21:25.000Z",
            "ETag": "f40aba5c86cfc4bde487f6640edb724b",
            "StorageClass": "STANDARD",
            "Key": "metadata/81ceabb1-20ba-427b-a507-f6c2243588a0/metadata/da2a415e-40be-47d9-ac81-4522bf612928.avro",
            "Size": 6849
        },
        {
            "LastModified": "2023-08-09T17:21:25.000Z",
            "ETag": "df3b02ffce0bb4dfddc7a048fb6c800c",
            "StorageClass": "STANDARD",
            "Key": "metadata/81ceabb1-20ba-427b-a507-f6c2243588a0/metadata/snap-3982440809221282401-1-89be0606-7152-4326-96ba-43dd7849b978.avro",
            "Size": 3770
        },
        ...
    ]
}
```
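The new keys under metadata/ appear to follow Apache Iceberg's metadata naming: a versioned `NNNNN-<uuid>.metadata.json` table metadata file, a `snap-<snapshot id>-<attempt>-<uuid>.avro` manifest list, and a `<uuid>.avro` manifest, grouped under one UUID folder per dataset. The sketch below groups a key listing by that folder and labels each file by name pattern; the patterns are my reading of the naming convention, not a Dremio API, and `group_metadata` is a hypothetical helper.

```python
import re
from collections import defaultdict

# Name patterns assumed from Apache Iceberg's metadata file conventions.
PATTERNS = [
    (re.compile(r"^\d{5}-[0-9a-f-]{36}\.metadata\.json$"), "table metadata"),
    (re.compile(r"^snap-\d+-\d+-[0-9a-f-]{36}\.avro$"), "manifest list"),
    (re.compile(r"^[0-9a-f-]{36}(-m\d+)?\.avro$"), "manifest"),
]


def classify(name: str) -> str:
    """Label a metadata file name by the first pattern it matches."""
    for pattern, label in PATTERNS:
        if pattern.match(name):
            return label
    return "other"


def group_metadata(keys: list[str]) -> dict[str, list[tuple[str, str]]]:
    """Map each metadata/<uuid>/ folder to (file name, kind) pairs."""
    groups = defaultdict(list)
    for key in keys:
        parts = key.split("/")
        # Expected shape: metadata/<dataset uuid>/metadata/<file>
        if len(parts) == 4 and parts[0] == "metadata" and parts[2] == "metadata":
            groups[parts[1]].append((parts[3], classify(parts[3])))
    return dict(groups)
```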

Dremio Metadata Storage

Dremio documentation states that high-availability (HA) Dremio deployments must use network-attached storage (NAS) for metadata storage. It also describes the required NAS characteristics, with low latency and high throughput for concurrent streams listed as must-haves. This is exactly what Pure Storage FlashBlade sets out to do!

The Helm chart's executor template already assigns a persistent volume claim (PVC) to the $DREMIO_HOME/data mount point. In my case, the PVC is provisioned from FlashBlade NFS storage:

```yaml
        volumeMounts:
        - name: {{ template "dremio.executor.volumeClaimName" (list $ $engineName) }}
          mountPath: /opt/dremio/data
```

To simulate a shared volume, I change the mountPath line in the template and add the following extra volume section for the executors to the Helm chart's values.yaml:

```yaml
extraVolumes:
- name: metadremio
  nfs:
    server: 192.168.2.2
    path: /metadremio
extraVolumeMounts:
- name: metadremio
  mountPath: /opt/dremio/data
```
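Kubernetes requires every volume mount to reference a volume declared in the same pod spec, and it rejects two mounts with the same mountPath in one container, so a typo or a leftover mount only shows up when the deployment fails. The sketch below checks the fragment above before running helm; it assumes the values have already been loaded into plain Python lists and dicts (for example with a YAML parser), and `check_extra_volumes` is a hypothetical helper. Adding the template's own `/opt/dremio/data` mount to the list would flag the clash that changing the template's mountPath avoids.

```python
def check_extra_volumes(volumes: list[dict], mounts: list[dict]) -> list[str]:
    """Return problems found in extraVolumes/extraVolumeMounts (empty list = OK)."""
    problems = []
    declared = {v["name"] for v in volumes}
    seen_paths = set()
    for m in mounts:
        # Each mount must name a declared volume.
        if m["name"] not in declared:
            problems.append(f"mount {m['name']!r} has no matching volume")
        # Mount paths must be unique within a container.
        if m["mountPath"] in seen_paths:
            problems.append(f"mountPath {m['mountPath']!r} is used more than once")
        seen_paths.add(m["mountPath"])
    for v in volumes:
        nfs = v.get("nfs")
        if nfs is not None and not (nfs.get("server") and nfs.get("path", "").startswith("/")):
            problems.append(f"volume {v['name']!r} needs an nfs server and absolute path")
    return problems


# The values from the fragment above, as Python data.
EXTRA_VOLUMES = [{"name": "metadremio", "nfs": {"server": "192.168.2.2", "path": "/metadremio"}}]
EXTRA_VOLUME_MOUNTS = [{"name": "metadremio", "mountPath": "/opt/dremio/data"}]
```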

I then check that the volume is mounted on the executors:

```shell
$ kubectl -n dremio exec -it dremio-executor-0 -- df -kh
...
192.168.2.2:/metadremio   50G     0   50G   0% /opt/dremio/data
...
```

I add several more NYCTAXI dataset Parquet files to my S3 bucket and let Dremio “discover” these additional files. I now have 55,842,484 rows of data.

After running some queries, the new metadata volume shows an increase in used space:

```shell
$ kubectl -n dremio exec -it dremio-executor-0 -- df -kh
...
192.168.40.165:/jbtdremio   50G  149M   50G   1% /opt/dremio/data
...
```
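To track this growth over time rather than eyeballing it, the `df` output can be parsed. The sketch below assumes GNU `df -h` formatting (K/M/G suffixes in powers of 1024) and that each filesystem fits on one line; `mount_usage` and `parse_size` are hypothetical helpers, and converted sizes are approximate because `df -h` rounds.

```python
import re

UNITS = {"": 1, "K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4, "P": 1024**5}


def parse_size(text: str) -> int:
    """Convert a df -h size such as '149M' or '50G' to bytes (approximate)."""
    m = re.fullmatch(r"([\d.]+)([KMGTP]?)", text)
    if m is None:
        raise ValueError(f"unrecognized size: {text!r}")
    return int(float(m.group(1)) * UNITS[m.group(2)])


def mount_usage(df_output: str, mount_point: str) -> dict:
    """Find the df line for mount_point and return its source and sizes."""
    for line in df_output.splitlines():
        fields = line.split()
        # Data lines have 6 fields; the header ("Mounted on") has 7.
        if len(fields) == 6 and fields[5] == mount_point:
            source, size, used, avail, pct, _ = fields
            return {
                "source": source,
                "size": parse_size(size),
                "used": parse_size(used),
                "avail": parse_size(avail),
                "use_pct": int(pct.rstrip("%")),
                # NFS sources look like server:/export/path
                "is_nfs": re.fullmatch(r"[^:]+:/.*", source) is not None,
            }
    raise LookupError(f"{mount_point} not mounted")
```

Subtracting the `used` value of an earlier snapshot from a later one gives the metadata growth between the two checks.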

Conclusion

That covers the three current ways to integrate Dremio with S3 or NFS storage: distributed storage, S3 sources, and metadata storage. As shown, Pure Storage FlashBlade provides the performance and concurrency required, along with seamless S3 and NFS support, to power a Dremio environment.
